[ad_1]

A case research of the assembly level between sport idea and elementary ideas in machine studying

Final semester, I took a seminar in Incentives and Studying. The papers we mentioned all through the course handled the overlap between the fields of sport idea and machine studying. I had little or no familiarity with formal sport idea beforehand, and I assumed discovering out extra about it by way of the lens of its intersection with machine studying was fascinating. By the top of this text, I hope you’ll assume so, too!

The paper my group selected to current was PAC-Studying for Strategic Classification (Sundaram, Vullikanti, Xu, & Yao, 2021). It generalizes the essential machine studying notion of PAC studying to work in a strategic binary classification setting. The phrase “strategic” right here signifies that the information factors we need to classify aren’t simply information factors, however quite characterize rational brokers with their very own particular person preferences.

This will likely be a three-part sequence on my takeaways from the paper. On this article, I’ll lay the intuitive and formal foundations required to know the strategic classification mannequin and setup. Within the subsequent one, I’ll cowl the idea of strategic VC dimension as a generalization of the canonical notion of VC dimension. The ultimate submit will likely be a walkthrough of my favourite proof within the paper, which can tie collectively the definitions and concepts launched within the first two.

An understanding the thought of binary classification and the essential notation used for it within the context of machine studying must be all it is advisable perceive the articles on this sequence. In the end, the aim is to current the ideas in a method that makes them as approachable as attainable, no matter your background.

Why Strategic Classification Is Helpful: Motivation

Binary classification is a cornerstone of machine studying. It was the primary matter I used to be taught after I took an introductory course on the topic; the real-world instance we examined again then was the issue of classifying emails as both spam or not spam. Different widespread examples embrace diagnosing a illness and screening resumes for a job posting.

The fundamental binary classification setup is intuitive and simply relevant to our day-to-day lives, and it could function a useful demonstration of the methods we are able to leverage machine studying to resolve human issues. However how typically will we cease to contemplate the truth that individuals normally have a vested curiosity within the classification final result of such issues? Spammers need their emails to make it by way of spam filters, not everybody desires their COVID take a look at to come back again optimistic, and job seekers could also be prepared to stretch the reality to attain an interview. The info factors aren’t simply information factors — they’re lively contributors within the classification course of, typically aiming to sport the system to their very own profit.

In mild of this, the canonical binary classification setup appears a bit simplistic. Nonetheless, the complexity of reexamining binary classification whereas tossing out the implicit assumption that the objects we want to classify are uninfluenced by exterior stakes sounds unmanageable. The preferences that would have an effect on the classification course of are available so many alternative types — how might we probably take all of them into account?

It seems that, below sure assumptions, we are able to. By way of a intelligent generalization of the canonical binary classification mannequin, the paper’s authors exhibit the feasibility of designing computationally-tractable, gaming-resistant classification algorithms.

From Knowledge Factors to Rational Brokers: Desire Lessons

First, if we need to be as life like as attainable, we now have to correctly contemplate the huge breadth of types that real-world preferences can take amongst rational brokers. The paper mentions 5 more and more common classes of preferences (which I’ll name desire lessons). The names I’ll use for them are my very own, however are based mostly on the terminology used within the paper.

- Neutral: No preferences, identical to in canonical binary classification.

- Homogeneous: Similar preferences throughout all of the brokers concerned. For instance, inside the set of people who find themselves prepared to fill out the paperwork crucial to use for a tax refund, we are able to fairly count on that everybody is equally motivated to get their a refund (i.e., to be labeled positively).

- Adversarial: Equally-motivated brokers intention to induce the alternative of their true labels. Consider bluffing in poker — a participant with a weak hand (negatively labeled) desires their opponents to assume they’ve a powerful hand (positively labeled), and vice versa. For the “equally-motivated” half, think about all gamers wager the identical quantity.

- Generalized Adversarial: Unequally-motivated brokers intention to induce the alternative of their true labels. This isn’t too totally different from the plain Adversarial case. Nonetheless, it must be straightforward to know how a participant with $100 {dollars} on the road can be prepared to go to larger lengths to deceive their opponents than a participant betting $1.

- Basic Strategic: “Something goes.” This desire class goals to embody any set of preferences possible. All 4 of the beforehand talked about desire lessons are strict subsets of this one. Naturally, this class is the principle focus of the paper, and many of the outcomes demonstrated within the paper apply to it. The authors give the fantastic instance of school functions, the place “college students [who] have heterogeneous preferences over universities […] could manipulate their software supplies in the course of the admission course of.”

How can the canonical classification setup be modified to account for such wealthy agent preferences? The reply is astoundingly easy. As a substitute of limiting our scope to (x, y) ∈ X × { -1, 1 }, we contemplate information factors of the shape (x, y, r) ∈ X × { -1, 1 } × R. A degree’s r worth represents its desire, which we are able to break down into two equally necessary elements:

- The signal of r signifies whether or not the information level desires to be positively or negatively labeled (r > 0 or r < 0, respectively).

- The absolute worth of r specifies how robust the information level’s desire is. For instance, an information level with r = 10 can be way more strongly motivated to control its function vector x to make sure it finally ends up being positively labeled than an information level with r = 1.

What determines the desire class we function inside is the set R. We will formally outline every of the aforementioned desire lessons by way of R and see how the formal definitions align with their intuitive descriptions and examples:

- Neutral: R = { 0 }. (This makes it abundantly clear that the strategic setup is only a generalization of the canonical setup.)

- Homogeneous: R = { 1 }.

- Adversarial: R = { -1, 1 }, with the added requirement that each one information factors favor to be labeled as the alternative of their true labels.

- Generalized Adversarial: R ⊆ ℝ (and all information factors favor to be labeled as the alternative of their true labels.)

- Basic Strategic: R ⊆ ℝ.

Giving Desire Magnitude Which means: Price Capabilities

Clearly, although, R by itself isn’t sufficient to assemble a whole common strategic framework. The very concept of an information level’s desire having a sure magnitude is meaningless with out tying it to the fee the information level incurs in manipulating its function vector. In any other case, any information level with a optimistic r, regardless of how small, would haven’t any motive to not manipulate its function vector advert infinitum. That is the place the idea of value features comes into play.

Let c: X × X → ℝ⁺. For simplicity, we are going to assume (because the paper’s authors do) that c is induced by seminorms. We are saying {that a} take a look at information level (x, y, r) could remodel its function vector x into z ∈ X with value c(z; x). It’s necessary to notice on this context that the paper assumes that the coaching information is unmanipulated.

We will divide value features into two classes, with the previous being a subset of the latter. An instance-invariant value perform is similar throughout all information factors. To place it extra formally:

∃ℓ: X × X → ℝ⁺ . ∀(x, y, r) ∈ X × { -1, 1 } × R . ∀z ∈ X . c(z; x) = ℓ(z – x)

I.e., there exists a perform ℓ such that for all information factors and all potential manipulated function vectors, c(z ; x) merely takes the worth of ℓ(z – x).

An instance-wise value perform could differ between information factors. Formally:

∀(x, y, r) ∈ X × { -1, 1 } × R . ∃ℓₓ: X × X → ℝ⁺ .∀z ∈ X . c(z; x) = ℓₓ(z – x)

I.e., every information level can have its personal perform, ℓₓ, and c(z; x) takes the worth of ℓₓ(z – x) for every particular person information level.

As we are going to see within the remaining article on this sequence, whereas the distinction between the 2 forms of value features could seem delicate, instance-wise value features are considerably extra expressive and tougher to study.

Desire Lessons and Price Capabilities in Motion: An Instance

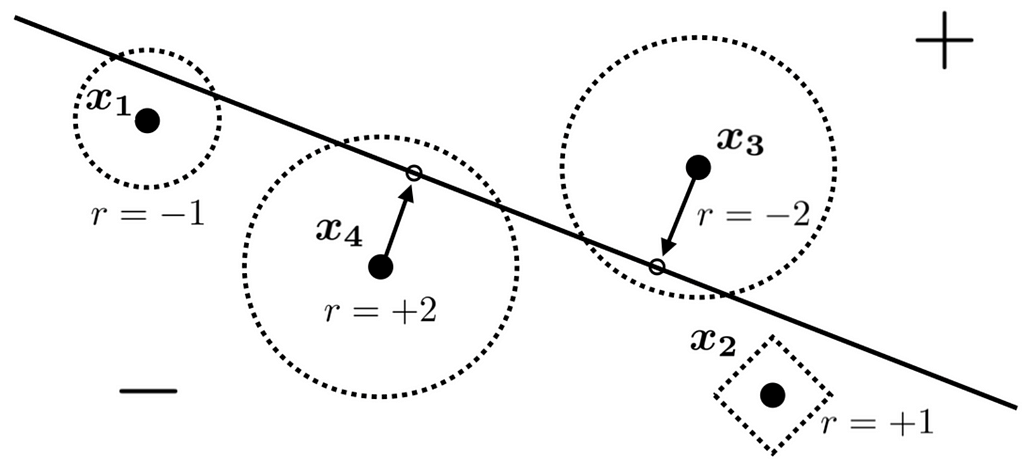

Let’s check out an instance given within the paper to assist hammer house the points of the setup we’ve lined so far.

On this instance, we now have a choice boundary induced by a linear binary classifier and 4 information factors with particular person preferences. Basic strategic is the one relevant desire class on this case.

The dotted perimeter round every xᵢ reveals the manipulated function vectors z to which it could value the purpose precisely 1 to maneuver. Since we assume the fee perform is induced by seminorms, all the pieces inside a fringe has a value of lower than 1 for the corresponding information level to maneuver to. We will simply inform that the fee perform on this instance varies from information level to information level, which suggests it’s instance-wise.

As we are able to see, the leftmost information level (x₁, -1, -1) has no incentive to cross the choice boundary since it’s on the unfavorable facet of the choice boundary whereas additionally having a unfavorable desire. (x₄, -1, 2), nevertheless, desires to be positively labeled, and because the reward for manipulating x₄ to cross the boundary (which is 2) outweighs the fee of doing so (which is lower than 1), it is smart to undergo with the manipulation. (x₃, 1, -2) is symmetric to (x₄, -1, 2), additionally deciding to control its function to attain its desired classification final result. Lastly, (x₂, -1, 1), the fee perform of which we are able to see is predicated on taxicab distance, opts to remain put no matter its desire to be positively labeled. It is because the price of manipulating x₂ to cross the choice boundary can be larger than 1, surpassing the reward the information level would stand to realize by doing so.

Assuming the brokers our information factors characterize are rational, we are able to very simply inform when an information level ought to manipulate its function vector (advantages outweigh prices) and when it shouldn’t (prices outweigh advantages). The subsequent step is to show our intuitive understanding into one thing extra formal.

Balancing Prices & Advantages: Defining Knowledge Level Greatest Response

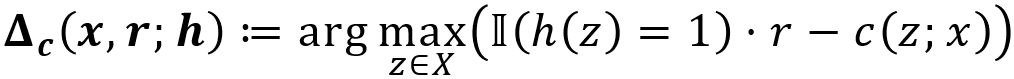

This leads us to outline the information level finest response:

So we’re searching for the function vector(s) z ∈ X that maximize… what precisely? Let’s break down the expression we’re aiming to maximise into extra manageable components.

- h: A given binary classifier (h: X → { -1, 1 }).

- c(z; x): As said above, this expresses the value of modifying the function vector x to be z.

- 𝕀(h(z) = 1): Right here, 𝕀(p) is the indicator perform, returning 1 if the predicate p is upheld or 0 if it isn’t. The predicate h(z) = 1 is true if the vector z into account is positively labeled by h. Placing that collectively, we discover that 𝕀(h(z) = 1) evaluates to 1 for any z that’s positively labeled. If r is optimistic, that’s good. If it’s unfavorable, that’s dangerous.

The underside-line is that we need to discover vector(s) z for which 𝕀(h(z) = 1) ⋅ r, which we are able to name the realized reward, outweighs the price of manipulating the unique x into z by as a lot as attainable. To place it in sport theoretic phrases, the information level finest response maximizes the utility of its corresponding agent within the context of the binary classification into account.

Placing It All Collectively: A Formal Definition of the Strategic Classification Drawback

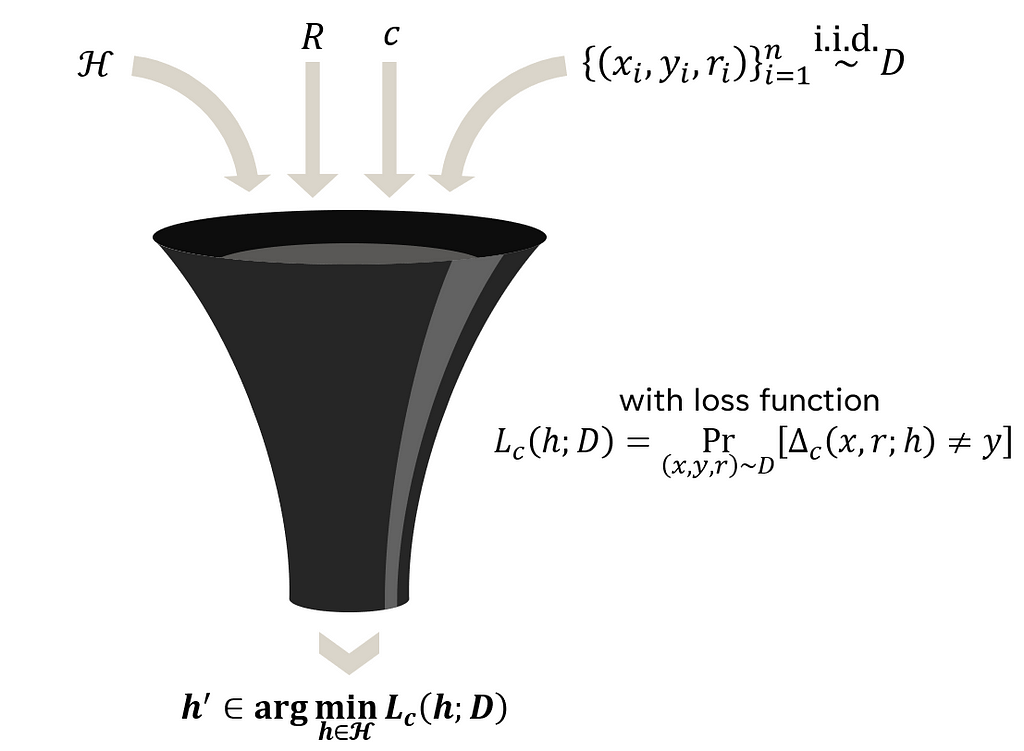

Lastly, we’ve laid all the mandatory groundwork to formally outline the strategic classification drawback.

Given a speculation class H, a desire class R, a value perform c, and a set of n information factors drawn from a distribution D, we need to discover a binary classifier h’ that minimizes the loss as outlined within the diagram above. Be aware that the loss is just a modification of the canonical zero-one loss, plugging within the information level finest response as an alternative of h(x).

Conclusion

Ranging from the canonical binary classification setup, we launched the notion of desire lessons. Subsequent, we noticed how one can formalize that notion utilizing an r worth for every information level. We then noticed how value features complement information level preferences. After that, we broke down an instance earlier than defining the important thing idea of information level finest response based mostly on the concepts we explored beforehand. Lastly, we used the information level finest response to outline the modified zero-one loss used within the definition of the strategic classification drawback.

Be part of me subsequent time as I outline and clarify the strategic VC dimension, which is the pure subsequent step from the place we left off this time.

References

[1] R. Sundaram, A. Vullikanti, H. Xu, F. Yao. PAC-Studying for Strategic Classification (2021), Worldwide Convention on Machine Studying.

Extending PAC Studying to a Strategic Classification Setting was initially revealed in In direction of Knowledge Science on Medium, the place persons are persevering with the dialog by highlighting and responding to this story.

[ad_2]

Supply hyperlink