Step-by-Step Workflow for creating and refining an AI Agent while dealing with errors

When we think about the future of AI, we envision intuitive everyday helpers that integrate seamlessly into our workflows and take on complex, routine tasks. We have all found touchpoints where AI relieves us of tedious mental routine work. Yet the main tasks currently tackled involve text creation, correction, and brainstorming, underlined by the significant role RAG (Retrieval-Augmented Generation) pipelines play in ongoing development. The aim there is to provide Large Language Models with better context so that they generate more valuable content.

For me, thinking about the future of AI conjures up images of Jarvis from Iron Man or Rasputin from Destiny (the game). In both examples, the AI acts as a voice-controlled interface to a complex system, offering high-level abstractions. For instance, Tony Stark uses it to manage his research, perform calculations, and run simulations. Even R2D2 can respond to voice commands to interface with unfamiliar computer systems and extract data or interact with building systems.

In these scenarios, AI enables interaction with complex systems without requiring the end user to understand them deeply. A comparable case today is the ERP system of a large corporation. It is rare to find someone in a large corporation who fully knows and understands every aspect of the in-house ERP system. It is not far-fetched to imagine that, in the near future, AI might assist with nearly every interaction with an ERP system. From the end user managing customer data or logging orders to the software developer fixing bugs or implementing new features, these interactions could soon be facilitated by AI assistants familiar with all functions and processes of the ERP system. Such an AI assistant would know which database to enter customer data into and which processes and code might be relevant to a bug.

To achieve this, several challenges and innovations lie ahead. We need to rethink processes and their documentation. Today's ERP processes are designed for human use, with specific roles for different users, documentation for humans, input masks for humans, and user interactions designed to be intuitive and error-free. The design of these aspects will look different for AI interactions: we need specific roles for AI and different process designs to enable intuitive and error-free AI interaction. This is already evident in our work with prompts. What we consider a clear task often turns out not to be so straightforward.

From Concept to Reality: Building the Foundation for AI Agents

However, let's first take a step back to the concept of agents. Agents, or AI assistants that can perform tasks using the tools provided and decide how to use these tools, are the building blocks that could eventually enable such a system. They are the process components we would want to integrate into every aspect of a complex system. But as highlighted in a previous article, they are challenging to deploy reliably. In this article, I will demonstrate how we can design and optimize an agent capable of reliably interacting with a database.

While the grand vision of AI's future is inspiring, it is crucial to take practical steps towards realizing it. To demonstrate how we can start building the foundation for such advanced AI systems, let's focus on creating a prototype agent for a common task: expense tracking. This prototype will serve as a tangible example of how AI can help manage financial transactions efficiently, showcasing the potential of AI in automating routine tasks and highlighting the challenges and considerations involved in designing an AI system that interacts seamlessly with databases. By starting with a specific and relatable use case, we can gain valuable insights that will inform the development of more complex AI agents in the future.

The Purpose of This Article

This article lays the groundwork for a series of articles aimed at developing a chatbot that can serve either as a single point of interaction for a small business, supporting and executing its business processes, or as a personal assistant that organizes everything you need to keep track of. From data, routines, and files to pictures, we want to simply chat with our assistant and let it decide where to store and retrieve our data.

Moving from the grand vision of AI's future to practical applications, let's zoom in on creating a prototype agent. This agent will serve as a foundational step towards the ambitious goals discussed earlier. We will develop an "Expense Tracking" agent, a straightforward yet essential task that demonstrates how AI can help manage financial transactions efficiently.

This "Expense Tracking" prototype will not only showcase the potential of AI in automating routine tasks but also illuminate the challenges and considerations involved in designing an AI system that interacts seamlessly with databases. By focusing on this example, we can explore the intricacies of agent design, input validation, and the integration of AI with existing systems, laying a solid foundation for more complex applications in the future.

1. Hands-On: Testing OpenAI Tool Calls

To bring our prototype agent to life and identify potential bottlenecks, we start by testing OpenAI's tool call functionality. Beginning with a basic expense tracking example, we lay down a foundational piece that mimics a real-world application. This stage involves creating a base model and transforming it into the OpenAI tool schema using the langchain library's convert_to_openai_tool function. Additionally, we create a report_tool that lets our future agent communicate results or highlight missing information or issues:

```python
from datetime import datetime

from langchain_core.utils.function_calling import convert_to_openai_tool
from pydantic.v1 import BaseModel


class Expense(BaseModel):
    description: str
    net_amount: float
    gross_amount: float
    tax_rate: float
    date: datetime


# Named ReportTool because the later snippets refer to it by this name
class ReportTool(BaseModel):
    report: str


add_expense_tool = convert_to_openai_tool(Expense)
report_tool = convert_to_openai_tool(ReportTool)
```

With the data model and tools set up, the next step is to use the OpenAI client SDK to initiate a simple tool call. In this initial test, we intentionally give the model insufficient information to see whether it correctly indicates what is missing. This tests not only the functional capability of the agent but also its interactive and error-handling capabilities.

Calling OpenAI API

To do so, we pass the system prompt, the user message, and both tool schemas to the Chat Completions endpoint:

```python
from openai import OpenAI
from langchain_core.utils.function_calling import convert_to_openai_tool

SYSTEM_MESSAGE = """You are tasked with completing specific objectives and
must report the outcomes. At your disposal, you have a variety of tools,
each specialized in performing a distinct type of task.

For successful task completion:
Thought: Consider the task at hand and determine which tool is best suited
based on its capabilities and the nature of the work.

Use the report_tool with an instruction detailing the results of your work.

If you encounter an issue and cannot complete the task:
Use the report_tool to communicate the challenge or reason for the
task's incompletion.

You will receive feedback based on the outcomes of
each tool's task execution or explanations for any tasks that
couldn't be completed. This feedback loop is crucial for addressing
and resolving any issues by strategically deploying the available tools.
"""

user_message = "I have spent 5$ on a coffee today please track my expense. The tax rate is 0.2."

client = OpenAI()  # reads the API key from the OPENAI_API_KEY environment variable
model_name = "gpt-3.5-turbo-0125"

messages = [
    {"role": "system", "content": SYSTEM_MESSAGE},
    {"role": "user", "content": user_message},
]

response = client.chat.completions.create(
    model=model_name,
    messages=messages,
    tools=[
        convert_to_openai_tool(Expense),
        convert_to_openai_tool(ReportTool),
    ],
)
```

Next, we need a function that reads the arguments of the function call from the response:

```python
import json


def parse_function_args(response):
    # The arguments of a tool call arrive as a JSON-encoded string
    message = response.choices[0].message
    return json.loads(message.tool_calls[0].function.arguments)


print(parse_function_args(response))
```

```
{'description': 'Coffee',
 'net_amount': 5,
 'gross_amount': None,
 'tax_rate': 0.2,
 'date': '2023-10-06T12:00:00Z'}
```
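parse_function_args assumes that the model always returns a tool call with valid JSON arguments. When the model answers in plain text instead, tool_calls is None and the helper fails with a TypeError. The following is a minimal, more defensive sketch; the name safe_parse_function_args is hypothetical, and the SimpleNamespace objects only mimic the attribute path of an SDK response (they are not the SDK's real classes). For simplicity it returns None both when there is no tool call and when the arguments are not valid JSON.

```python
import json
from types import SimpleNamespace
from typing import Optional


def safe_parse_function_args(response) -> Optional[dict]:
    """Return the first tool call's arguments, or None if none can be parsed."""
    message = response.choices[0].message
    if not message.tool_calls:  # model replied with text instead of a tool call
        return None
    try:
        return json.loads(message.tool_calls[0].function.arguments)
    except json.JSONDecodeError:  # malformed JSON from the model
        return None


def fake_response(tool_calls):
    """Stand-in with the same attribute path as an SDK response (not the real class)."""
    return SimpleNamespace(
        choices=[SimpleNamespace(message=SimpleNamespace(tool_calls=tool_calls))]
    )


call = SimpleNamespace(function=SimpleNamespace(
    name="Expense",
    arguments='{"description": "Coffee", "net_amount": 5, "gross_amount": null}',
))
print(safe_parse_function_args(fake_response([call])))  # JSON null becomes None
print(safe_parse_function_args(fake_response(None)))    # no tool call, so None
```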

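As we can observe, we have encountered several issues in the execution:

- The gross_amount is not calculated (it comes back as None), even though the net amount and tax rate are known.

- The date is hallucinated: the user said "today", but the model has no access to the current date and made one up.

With that in mind, let's resolve these issues and optimize our agent workflow.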

2. Optimize Tool Handling

To optimize the agent workflow, I find it crucial to prioritize workflow over prompt engineering. While it can be tempting to fine-tune the prompt until the agent uses the provided tools perfectly and makes no mistakes, it is more advisable to adjust the tools and processes first. When a typical error occurs, the first question should be how to fix it in code.
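As one illustration of a fix in code for the first issue listed above, the gross amount does not have to come from the model at all, since it follows from gross = net * (1 + tax_rate). The sketch below uses a hypothetical helper, complete_amounts, that fills in whichever amount is missing and rejects a pair that contradicts the tax rate. It assumes tax_rate is a fraction (0.2 for 20%), as in the user message, and rounds to cents. This is an alternative to the approach the article takes next, not part of the author's code.

```python
def complete_amounts(net_amount=None, gross_amount=None, tax_rate=None, tolerance=0.01):
    """Hypothetical helper: derive the missing amount via gross = net * (1 + tax_rate).

    Returns (net, gross) rounded to cents, or raises ValueError when the
    inputs are insufficient or contradict each other.
    """
    if tax_rate is None:
        raise ValueError("tax_rate is required to derive amounts")
    if net_amount is None and gross_amount is None:
        raise ValueError("need at least one of net_amount or gross_amount")
    factor = 1 + tax_rate
    if gross_amount is None:
        gross_amount = net_amount * factor
    elif net_amount is None:
        net_amount = gross_amount / factor
    elif abs(net_amount * factor - gross_amount) > tolerance:
        # Both amounts given, but they disagree with the tax rate
        raise ValueError(
            f"gross_amount {gross_amount} does not match "
            f"net_amount {net_amount} with tax_rate {tax_rate}"
        )
    return round(net_amount, 2), round(gross_amount, 2)


print(complete_amounts(net_amount=5, tax_rate=0.2))  # (5, 6.0)
```

A deterministic check like this keeps the arithmetic out of the model's hands; the model only has to supply the facts it was actually given.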

Handling Missing Information

Handling missing information effectively is essential for creating robust and reliable agents. In the previous example, giving the agent a tool like "get_current_date" would fix the hallucinated date, but only as a workaround for that specific scenario. We must assume that missing information will occur in many contexts, and we cannot rely solely on prompt engineering and ever more tools to keep the model from hallucinating it.

A simple workaround for this situation is to modify the tool schema so that all parameters are optional. The agent is then free to submit only the parameters it knows instead of being pushed to invent values for required fields.

So let's take a look at the OpenAI tool schema:

```python
add_expense_tool = convert_to_openai_tool(Expense)
print(add_expense_tool)
```

```
{'type': 'function',
 'function': {'name': 'Expense',
  'description': '',
  'parameters': {'type': 'object',
   'properties': {'description': {'type': 'string'},
    'net_amount': {'type': 'number'},
    'gross_amount': {'type': 'number'},
    'tax_rate': {'type': 'number'},
    'date': {'type': 'string', 'format': 'date-time'}},
   'required': ['description',
    'net_amount',
    'gross_amount',
    'tax_rate',
    'date']}}}
```

As we can see, the schema contains the special key required, which we need to remove. Here's how to adjust the add_expense_tool schema so that all parameters become optional:

```python
del add_expense_tool["function"]["parameters"]["required"]
```
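The del statement mutates the schema dict in place and raises a KeyError if the key has already been removed. A slightly more defensive variant, sketched here as a hypothetical make_all_optional helper, works on a deep copy and uses dict.pop with a default, so the original schema stays untouched and repeated calls are safe. The input dict is the Expense schema printed above.

```python
import copy


def make_all_optional(tool_schema: dict) -> dict:
    """Return a copy of an OpenAI tool schema without the 'required' list."""
    schema = copy.deepcopy(tool_schema)  # leave the caller's dict unchanged
    schema["function"]["parameters"].pop("required", None)  # no KeyError if absent
    return schema


expense_schema = {
    "type": "function",
    "function": {
        "name": "Expense",
        "description": "",
        "parameters": {
            "type": "object",
            "properties": {
                "description": {"type": "string"},
                "net_amount": {"type": "number"},
                "gross_amount": {"type": "number"},
                "tax_rate": {"type": "number"},
                "date": {"type": "string", "format": "date-time"},
            },
            "required": ["description", "net_amount", "gross_amount", "tax_rate", "date"],
        },
    },
}

optional_schema = make_all_optional(expense_schema)
print("required" in optional_schema["function"]["parameters"])  # False
print("required" in expense_schema["function"]["parameters"])   # True
```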

Designing the Tool Class

Next, we design a Tool class that checks the input parameters for missing values before running the tool. The Tool class has two methods, .run() and .validate_input(), and a property openai_tool_schema, in which we manipulate the tool schema by removing the required parameters. Additionally, we define the ToolResult BaseModel with the fields content and success, which serves as the output object for each tool run.

```python
from typing import Any, Callable, Dict, List, Type

from langchain_core.utils.function_calling import convert_to_openai_tool
# Same pydantic namespace as Expense/ReportTool, so Type[BaseModel] accepts them
from pydantic.v1 import BaseModel


class ToolResult(BaseModel):
    content: str
    success: bool


class Tool(BaseModel):
    name: str
    model: Type[BaseModel]
    function: Callable
    validate_missing: bool = False

    class Config:
        arbitrary_types_allowed = True

    def run(self, **kwargs) -> ToolResult:
        if self.validate_missing:
            missing_values = self.validate_input(**kwargs)
            if missing_values:
                content = f"Missing values: {', '.join(missing_values)}"
                return ToolResult(content=content, success=False)
        result = self.function(**kwargs)
        return ToolResult(content=str(result), success=True)

    def validate_input(self, **kwargs) -> List[str]:
        # Collect every model field that the caller did not pass
        missing_values = []
        for key in self.model.__fields__.keys():
            if key not in kwargs:
                missing_values.append(key)
        return missing_values

    @property
    def openai_tool_schema(self) -> Dict[str, Any]:
        schema = convert_to_openai_tool(self.model)
        # Make all parameters optional so the model is not pushed to invent values
        if "required" in schema["function"]["parameters"]:
            del schema["function"]["parameters"]["required"]
        return schema
```

The Tool class is a crucial component of the AI agent's workflow, serving as a blueprint for creating and managing the tools the agent can use to perform specific tasks. It handles input validation, executes the tool's function, and returns the result in a standardized format.

Key components of the Tool class:

- name: The name of the tool.

- model: The Pydantic BaseModel that defines the input schema for the tool.

- function: The callable that the tool executes.

- validate_missing: A boolean flag indicating whether to validate missing input values (default is False).

The Tool class has two main methods:

- run(self, **kwargs) -> ToolResult: This method executes the tool's function with the provided input arguments. It first checks whether validate_missing is set to True. If so, it calls the validate_input() method to check for missing input values. If any are found, it returns a ToolResult object with an error message and success set to False. If all required input values are present, it executes the tool's function with the provided arguments and returns a ToolResult object with the result and success set to True.

- validate_input(self, **kwargs) -> List[str]: This method compares the input arguments passed to the tool with the expected input schema defined in the model. It iterates over the fields defined in the model and checks whether each field is present in the input arguments. If a field is missing, its name is appended to a list of missing values, which the method finally returns.

The Tool class also has a property called openai_tool_schema, which returns the OpenAI tool schema for the tool. It uses the convert_to_openai_tool() function to convert the model to the OpenAI tool schema format and then removes the "required" key from the schema, making all input parameters optional. This allows the agent to provide only the information it has, without needing to hallucinate missing values.

By encapsulating the tool's functionality, input validation, and schema generation, the Tool class provides a clean and reusable interface for creating and managing tools in the AI agent's workflow. It abstracts away the complexity of handling missing values and lets the agent deal gracefully with incomplete information while executing the appropriate tools based on the available input.
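One gap worth noting: validate_input only checks whether a key is present, yet the first test run above showed the model sending 'gross_amount': None. Under a presence check, a null value counts as provided. A stricter variant, sketched below as a standalone function rather than a change to the author's class, also treats None as missing. The function name find_missing_values is hypothetical; the field names are those of the Expense model.

```python
from typing import Any, Dict, Iterable, List


def find_missing_values(field_names: Iterable[str], kwargs: Dict[str, Any]) -> List[str]:
    """Return fields that are absent from kwargs or explicitly set to None."""
    return [name for name in field_names if kwargs.get(name) is None]


EXPENSE_FIELDS = ["description", "net_amount", "gross_amount", "tax_rate", "date"]

model_args = {
    "description": "Coffee",
    "net_amount": 5,
    "gross_amount": None,  # the model sent null instead of omitting the key
    "tax_rate": 0.2,
}
print(find_missing_values(EXPENSE_FIELDS, model_args))  # ['gross_amount', 'date']
```

Falsy but valid values such as 0 still count as present, because the check compares against None rather than testing truthiness. Fields that legitimately default to None would need to be excluded from the list.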

Testing Missing Information Handling

Next, we extend our OpenAI API call. We want the client to use our tools and the response object to trigger a tool.run() directly. For this, we wrap our tools in the newly created Tool class. We define two dummy functions that return a success message string.

```python
def add_expense_func(**kwargs):
    # Dummy implementation: a real tool would write to the database here
    return f"Added expense: {kwargs} to the database."


add_expense_tool = Tool(
    name="add_expense_tool",
    model=Expense,
    function=add_expense_func,
    validate_missing=True,  # report missing fields back instead of calling the function
)


def report_func(report: str = None):
    return f"Reported: {report}"


report_tool = Tool(
    name="report_tool",
    model=ReportTool,
    function=report_func,
    validate_missing=True,
)

tools = [add_expense_tool, report_tool]
```

Next, we define our helper functions, each of which takes the client response as input and helps us interact with our tools.

```python
import json


def get_tool_from_response(response, tools=tools):
    # Look up the Tool whose name matches the tool call chosen by the model
    tool_name = response.choices[0].message.tool_calls[0].function.name
    for t in tools:
        if t.name == tool_name:
            return t
    raise ValueError(f"Tool {tool_name} not found in tools list.")


def parse_function_args(response):
    message = response.choices[0].message
    return json.loads(message.tool_calls[0].function.arguments)


def run_tool_from_response(response, tools=tools):
    tool = get_tool_from_response(response, tools)
    tool_kwargs = parse_function_args(response)
    return tool.run(**tool_kwargs)
```

Now we can call the client with our new tools and use the run_tool_from_response function.

```python
response = client.chat.completions.create(
    model=model_name,
    messages=messages,
    tools=[tool.openai_tool_schema for tool in tools],
)

tool_result = run_tool_from_response(response, tools=tools)
print(tool_result)
```

```
content='Missing values: gross_amount, date' success=False
```

Perfect: our tool now reports that values are missing. Thanks to the trick of sending all parameters as optional, we avoid hallucinated parameters.

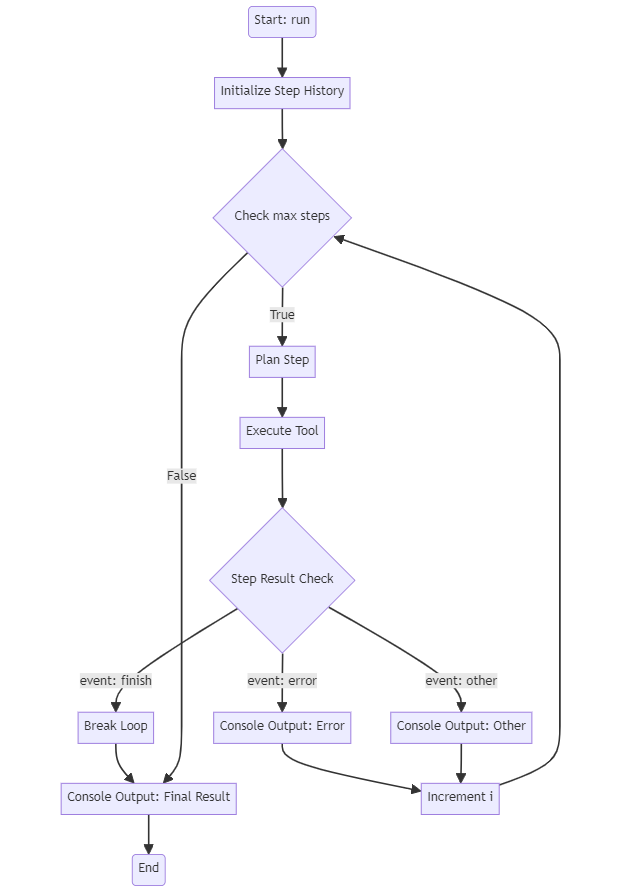

3. Building the Agent Workflow

As it stands, our process does not yet represent a true agent. So far, we have only executed a single API tool call. To turn this into an agent workflow, we need an iterative process that feeds the results of tool execution back to the client. The basic process is a loop: the model plans the next step and picks a tool, the tool runs, and its result is appended to the message history before the model plans again, until the task is reported as finished or a step limit is reached.
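Before wiring this into a class, the loop itself can be sketched without any API calls. In this minimal version, a scripted fake_model function stands in for the chat completion and returns one planned tool call per step. Each tool result is appended to the history, and the loop stops when the report tool runs or max_steps is reached. The names TOOLS, SCRIPT, fake_model, and run_agent are all hypothetical, and the tools are plain functions rather than the Tool class.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: plain functions keyed by name
TOOLS: Dict[str, Callable[..., str]] = {
    "get_current_date": lambda: "2024-03-15",
    "add_expense_tool": lambda **kw: f"Added expense: {kw}",
    "report_tool": lambda report: f"Reported: {report}",
}

# Scripted plan standing in for the model's successive tool calls
SCRIPT: List[Tuple[str, dict]] = [
    ("get_current_date", {}),
    ("add_expense_tool", {"description": "Coffee", "net_amount": 5,
                          "gross_amount": 6, "tax_rate": 0.2, "date": "2024-03-15"}),
    ("report_tool", {"report": "Expense tracked."}),
]


def fake_model(history: List[dict]) -> Tuple[str, dict]:
    """Pick the next scripted call based on how many tool results exist so far."""
    step = sum(1 for msg in history if msg["role"] == "tool")
    return SCRIPT[step]


def run_agent(user_input: str, max_steps: int = 5) -> Tuple[str, List[dict]]:
    history = [{"role": "user", "content": user_input}]
    result = ""
    for _ in range(max_steps):
        tool_name, kwargs = fake_model(history)  # 1. plan the next step
        result = TOOLS[tool_name](**kwargs)      # 2. execute the chosen tool
        history.append({"role": "tool", "name": tool_name, "content": result})  # 3. feed back
        if tool_name == "report_tool":           # 4. stop once the agent reports
            break
    return result, history


final, history = run_agent("I have spent 5$ on a coffee today.")
print(final)  # Reported: Expense tracked.
```

The step limit matters as much as the stop condition: it guarantees termination even if the model never calls the report tool.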

Let's get started by creating a new OpenAIAgent class:

```python
from colorama import Fore, Style
from openai import OpenAI
from pydantic.v1 import BaseModel


class StepResult(BaseModel):
    event: str
    content: str
    success: bool


class OpenAIAgent:
    def __init__(
        self,
        tools: list[Tool],
        client: OpenAI,
        system_message: str = SYSTEM_MESSAGE,
        model_name: str = "gpt-3.5-turbo-0125",
        max_steps: int = 5,
        verbose: bool = True,
    ):
        self.tools = tools
        self.client = client
        self.model_name = model_name
        self.system_message = system_message
        self.step_history = []
        self.max_steps = max_steps
        self.verbose = verbose

    def to_console(self, tag: str, message: str, color: str = "green"):
        if self.verbose:
            # colorama exposes colors as attributes, e.g. Fore.GREEN, Fore.RED
            color_prefix = getattr(Fore, color.upper())
            print(color_prefix + f"{tag}: {message}{Style.RESET_ALL}")
```

Like our ToolResult object, StepResult is the output object of each agent step. We then defined the __init__ method of the OpenAIAgent class and a to_console() method that prints intermediate steps and tool calls to the console, using colorama for colored output. Next, we define the heart of the agent, the run() and run_step() methods.

```python
class OpenAIAgent:
    # ... __init__ ...
    # ... to_console ...

    def run(self, user_input: str):
        openai_tools = [tool.openai_tool_schema for tool in self.tools]
        self.step_history = [
            {"role": "system", "content": self.system_message},
            {"role": "user", "content": user_input},
        ]
        step_result = None
        i = 0

        self.to_console("START", f"Starting Agent with Input: {user_input}")

        while i < self.max_steps:
            step_result = self.run_step(self.step_history, openai_tools)

            if step_result.event == "finish":
                break
            elif step_result.event == "error":
                self.to_console(step_result.event, step_result.content, "red")
            else:
                self.to_console(step_result.event, step_result.content, "yellow")
            i += 1

        self.to_console("Final Result", step_result.content, "green")
        return step_result.content
```

In the run() method, we start by initializing step_history, which serves as our message memory, with the predefined system_message and the user_input. Then we enter the while loop and call run_step in each iteration, which returns a StepResult object. We check whether the agent has finished its task or whether an error occurred, and print the step to the console accordingly.

```python
class OpenAIAgent:
    # ... __init__ ...
    # ... to_console ...
    # ... run ...

    def run_step(self, messages: list[dict], tools):
        # plan the next step
        response = self.client.chat.completions.create(
            model=self.model_name,
            messages=messages,
            tools=tools,
        )

        # add message to history
        self.step_history.append(response.choices[0].message)

        # check if a tool call is present
        if not response.choices[0].message.tool_calls:
            return StepResult(
                event="error",
                content="No tool calls were returned.",
                success=False,
            )

        tool_name = response.choices[0].message.tool_calls[0].function.name
        tool_kwargs = parse_function_args(response)

        # execute the tool call
        self.to_console(
            "Tool Call", f"Name: {tool_name}\nArgs: {tool_kwargs}", "magenta"
        )
        tool_result = run_tool_from_response(response, tools=self.tools)
        tool_result_msg = self.tool_call_message(response, tool_result)
        self.step_history.append(tool_result_msg)

        if tool_result.success:
            # a successful report ends the run; run() breaks on the "finish" event
            event = "finish" if tool_name == "report_tool" else "tool_result"
            step_result = StepResult(
                event=event,
                content=tool_result.content,
                success=True,
            )
        else:
            step_result = StepResult(
                event="error",
                content=tool_result.content,
                success=False,
            )
        return step_result

    def tool_call_message(self, response, tool_result: ToolResult):
        # OpenAI links a tool output to its call through the tool_call_id
        tool_call = response.choices[0].message.tool_calls[0]
        return {
            "tool_call_id": tool_call.id,
            "role": "tool",
            "name": tool_call.function.name,
            "content": tool_result.content,
        }
```

This defines the logic of each step. We first obtain a response object through the client API call with tools that we tested earlier and append the response message to our step_history. We then check whether the response contains a tool call; if it does not, we return an error StepResult. Otherwise, we log the tool call to the console and run the selected tool with the previously defined run_tool_from_response() function. We also need to append the tool result to our message history. OpenAI defines a specific format for this: a tool_call_id in the message dict tells the model which tool call an output belongs to. This message is built by our tool_call_message() method, which takes the response object and the tool_result as input arguments. At the end of each step, we wrap the tool result in a StepResult object, which also indicates whether the step succeeded, and return it to the loop in run().
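tool_call_message() answers only tool_calls[0]. Models such as gpt-3.5-turbo-0125 can return several tool calls in one response, and the Chat Completions API expects a tool message for every tool_call_id in the assistant message. A minimal sketch of answering all of them follows; it uses stand-in objects instead of the SDK and takes a run_tool callable as an assumption, so the names tool_call_messages and make_call are hypothetical. Newer API versions also accept a parallel_tool_calls=False request parameter that disables this behavior, if your SDK version supports it.

```python
import json
from types import SimpleNamespace
from typing import Callable, List


def tool_call_messages(message, run_tool: Callable[[str, dict], str]) -> List[dict]:
    """Build one 'tool' message per tool call, keyed by its tool_call_id."""
    results = []
    for tool_call in message.tool_calls or []:
        kwargs = json.loads(tool_call.function.arguments)
        content = run_tool(tool_call.function.name, kwargs)
        results.append({
            "tool_call_id": tool_call.id,
            "role": "tool",
            "name": tool_call.function.name,
            "content": content,
        })
    return results


def make_call(call_id, name, args):
    """Stand-in for an SDK tool-call object (not the real class)."""
    return SimpleNamespace(
        id=call_id,
        function=SimpleNamespace(name=name, arguments=json.dumps(args)),
    )


message = SimpleNamespace(tool_calls=[
    make_call("call_a", "get_current_date", {}),
    make_call("call_b", "report_tool", {"report": "done"}),
])

msgs = tool_call_messages(message, lambda name, kwargs: f"{name} ran with {kwargs}")
print([m["tool_call_id"] for m in msgs])  # ['call_a', 'call_b']
```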

4. Running the Agent

Now we can test our agent with the previous example, this time also equipping it with a get_current_date tool. For this tool, we set the validate_missing attribute to False, since it doesn't need any input arguments.

```python
from datetime import datetime


class DateTool(BaseModel):
    x: str = None


get_date_tool = Tool(
    name="get_current_date",
    model=DateTool,
    # accept and ignore any arguments the model might pass (e.g. x)
    function=lambda **kwargs: datetime.now().strftime("%Y-%m-%d"),
    validate_missing=False,
)

tools = [
    add_expense_tool,
    report_tool,
    get_date_tool,
]

agent = OpenAIAgent(tools, client)
agent.run("I have spent 5$ on a coffee today please track my expense. The tax rate is 0.2.")
```

```
START: Starting Agent with Input:
"I have spent 5$ on a coffee today please track my expense. The tax rate is 0.2."

Tool Call: get_current_date
Args: {}
tool_result: 2024-03-15

Tool Call: add_expense_tool
Args: {'description': 'Coffee expense', 'net_amount': 5, 'tax_rate': 0.2, 'date': '2024-03-15'}
error: Missing values: gross_amount

Tool Call: add_expense_tool
Args: {'description': 'Coffee expense', 'net_amount': 5, 'tax_rate': 0.2, 'date': '2024-03-15', 'gross_amount': 6}
tool_result: Added expense: {'description': 'Coffee expense', 'net_amount': 5, 'tax_rate': 0.2, 'date': '2024-03-15', 'gross_amount': 6} to the database.

Error: No tool calls were returned.

Tool Call: Name: report_tool
Args: {'report': 'Expense successfully tracked for coffee purchase.'}
tool_result: Reported: Expense successfully tracked for coffee purchase.

Final Result: Reported: Expense successfully tracked for coffee purchase.
```

The run shows how the agent used the designated tools according to plan. First, it invoked the get_current_date tool, establishing a timestamp for the expense entry. Then, when it tried to log the expense via the add_expense_tool, our Tool class identified the missing gross_amount, a crucial piece of information for accurate financial tracking. The agent resolved this on its own by calculating the gross_amount from the provided tax_rate (5 × 1.2 = 6). After one step without a tool call, it finished by reporting the result through the report_tool.

Note that in our test run, the nature of the input expense (whether the $5 spent on coffee was net or gross) was never specified; the agent silently treated it as the net amount. This did not prevent the agent from completing its task, but it points to a valuable refinement: stating such conventions in the initial system prompt could noticeably improve the agent's accuracy and efficiency in processing expense entries, giving it a clearer grasp of the financial data from the outset.
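The ambiguity is easy to quantify. With gross = net × (1 + tax_rate), reading the $5 as net gives a gross of 6.00 (the agent's reading), while reading it as gross gives a net of about 4.17. The hypothetical helper below makes that choice explicit through an amount_is_gross flag instead of leaving it to the model; it is a sketch, not part of the author's code.

```python
def split_amount(amount: float, tax_rate: float, amount_is_gross: bool) -> dict:
    """Return net, gross, and tax for an amount, depending on how it is interpreted."""
    if amount_is_gross:
        net = amount / (1 + tax_rate)
        gross = amount
    else:
        net = amount
        gross = amount * (1 + tax_rate)
    return {
        "net_amount": round(net, 2),
        "gross_amount": round(gross, 2),
        "tax": round(gross - net, 2),
    }


print(split_amount(5, 0.2, amount_is_gross=False))  # agent's reading: $5 is net
print(split_amount(5, 0.2, amount_is_gross=True))   # alternative: $5 is gross
```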

Key Takeaways

- Iterative Development: The project underscores the importance of an iterative development cycle that fosters continuous improvement through feedback. This approach is paramount in AI, where variability is the norm and an adaptable, responsive development strategy is necessary.

- Handling Uncertainty: Our work highlighted the importance of managing ambiguities and errors gracefully. Techniques such as optional parameters and rigorous input validation proved instrumental in improving both the reliability and the user experience of the agent.

- Customized Agent Workflows for Specific Tasks: A key insight from this work is the importance of tailoring agent workflows to particular use cases. Beyond assembling a set of tools, the strategic design of tool interactions and responses is vital. This customization ensures the agent effectively addresses specific challenges, leading to a more focused and efficient problem-solving approach.

The journey we have embarked upon is only the beginning of a larger exploration of AI agents and their applications in various domains. As we continue to push the boundaries of what is possible with AI, we invite you to join us. By building on the foundation laid in this article and following the upcoming enhancements, you will see firsthand how AI agents can change the way businesses and individuals handle their data and automate complex tasks.

Together, let us embrace the power of AI and unlock its potential to transform the way we work and interact with technology. The future of AI is bright, and we are at the forefront of shaping it, one reliable agent at a time.

Looking Ahead

As we continue exploring the potential of AI agents, the upcoming articles will focus on expanding the capabilities of our prototype and integrating it with real-world systems. In the next article, we will design a robust project structure that allows our agent to interact seamlessly with SQL databases. Building on the agent developed in this article, we will demonstrate how AI can efficiently manage and manipulate data stored in databases, opening up many possibilities for automating data-related tasks.

Building on this foundation, the third article in the series will introduce advanced query features, enabling our agent to handle more complex data retrieval and manipulation tasks. We will also explore the concept of a routing agent, which acts as a central hub managing multiple subagents, each responsible for interacting with specific database tables. This hierarchical structure will allow users to make requests in natural language, which the routing agent then interprets and directs to the appropriate subagent for execution.

To further improve the practicality and security of our AI-powered system, we will introduce role-based access control. This ensures that users have the appropriate permissions to access and modify data based on their assigned roles. By implementing this feature, we can demonstrate how AI agents can be deployed in real-world scenarios while maintaining data integrity and security.

Through these upcoming enhancements, we aim to show the potential of AI agents in streamlining data management processes and giving users a more intuitive and efficient way to interact with databases. By combining natural language processing, database management, and role-based access control, we will lay the groundwork for sophisticated AI assistants that can change the way businesses and individuals handle their data.

Stay tuned for these developments as we continue to push the boundaries of what is possible with AI agents in data management and beyond.

Source Code

The complete source code for the projects covered is available on GitHub at https://github.com/elokus/AgentDemo.

Leverage OpenAI Tool calling: Building a reliable AI Agent from Scratch was originally published in Towards Data Science on Medium.
