Music generation models have emerged as powerful tools that transform natural language text into musical compositions. Originating from advancements in artificial intelligence (AI) and deep learning, these models are designed to understand and translate descriptive text into coherent, aesthetically pleasing music. Their ability to democratize music production allows individuals without formal training to create high-quality music by simply describing their desired outcomes.

Generative AI models are revolutionizing music creation and consumption. Companies can use this technology to develop new products, streamline processes, and explore untapped potential, yielding significant business impact. Such music generation models enable diverse applications, from personalized soundtracks for multimedia and gaming to educational resources for students exploring musical styles and structures. They also assist artists and composers by providing new ideas and compositions, fostering creativity and collaboration.

One prominent example of a music generation model is AudioCraft MusicGen by Meta. The MusicGen code is released under the MIT license, and the model weights are released under CC-BY-NC 4.0. MusicGen can create music based on text or melody inputs, giving you better control over the output. The following diagram shows how MusicGen, a single-stage auto-regressive Transformer model, can generate high-quality music based on text descriptions or audio prompts.

MusicGen uses cutting-edge AI technology to generate diverse musical styles and genres, catering to various creative needs. Unlike traditional methods that cascade several models, for example hierarchically or through upsampling, MusicGen operates as a single language model over several streams of compressed discrete music representation (tokens). This streamlined approach gives users precise control over generating high-quality mono and stereo samples tailored to their preferences.

MusicGen models can be used across education, content creation, and music composition. They can enable students to experiment with diverse musical styles, generate custom soundtracks for multimedia projects, and create personalized music compositions. Additionally, MusicGen can assist musicians and composers, fostering creativity and innovation.

This post demonstrates how to deploy MusicGen, a music generation model, on Amazon SageMaker using asynchronous inference. We specifically focus on text-conditioned generation of music samples using MusicGen models.

Solution overview

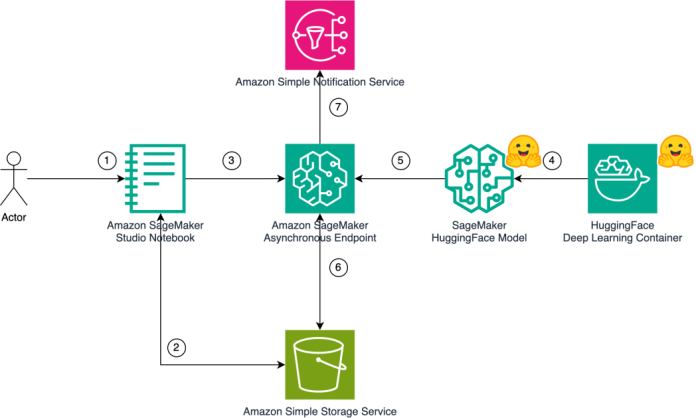

Generative AI models that produce audio, music, or video can be computationally intensive and time-consuming. Such models can use asynchronous inference, which queues incoming requests and processes them asynchronously. Our solution involves deploying the AudioCraft MusicGen model on SageMaker using SageMaker endpoints for asynchronous inference. This entails deploying AudioCraft MusicGen models sourced from the Hugging Face Model Hub onto SageMaker infrastructure.

The following solution architecture diagram shows how a user can generate music using natural language text as an input prompt by using AudioCraft MusicGen models deployed on SageMaker.

The following steps detail the sequence happening in the workflow from the moment the user enters the input to the point where music is generated as output:

- The user invokes the SageMaker asynchronous endpoint using an Amazon SageMaker Studio notebook.

- The input payload is uploaded to an Amazon Simple Storage Service (Amazon S3) bucket for inference. The payload consists of both the prompt and the music generation parameters. The generated music will be downloaded from the S3 bucket.

- The facebook/musicgen-large model is deployed to a SageMaker asynchronous endpoint. This endpoint is used to infer for music generation.

- The HuggingFace Inference Containers image is used as a base image. We use an image that supports PyTorch 2.1.0 with a Hugging Face Transformers framework.

- The SageMaker HuggingFaceModel is deployed to a SageMaker asynchronous endpoint.

- The Hugging Face model (facebook/musicgen-large) is uploaded to Amazon S3 during deployment. Also, during inference, the generated outputs are uploaded to Amazon S3.

- We use Amazon Simple Notification Service (Amazon SNS) topics to notify the success and failure as defined as a part of the SageMaker asynchronous inference configuration.

Prerequisites

Make sure you have the following prerequisites in place:

- Confirm you have access to the AWS Management Console to create and manage resources in SageMaker, AWS Identity and Access Management (IAM), and other AWS services.

- If you’re using SageMaker Studio for the first time, create a SageMaker domain. Refer to Quick setup to Amazon SageMaker to create a SageMaker domain with default settings.

- Obtain the AWS Deep Learning Containers for Large Model Inference from pre-built HuggingFace Inference Containers.

Deploy the solution

To deploy the AudioCraft MusicGen model to a SageMaker asynchronous inference endpoint, complete the following steps:

- Create a model serving package for MusicGen.

- Create a Hugging Face model.

- Define asynchronous inference configuration.

- Deploy the model on SageMaker.

We detail each of the steps and show how we can deploy the MusicGen model onto SageMaker. For the sake of brevity, only significant code snippets are included. The full source code for deploying the MusicGen model is available in the GitHub repo.

Create a model serving package for MusicGen

To deploy MusicGen, we first create a model serving package. The model package contains a requirements.txt file that lists the necessary Python packages to be installed to serve the MusicGen model. The model package also contains an inference.py script that holds the logic for serving the MusicGen model.

Let’s look at the key functions used in serving the MusicGen model for inference on SageMaker.

The model_fn function loads the MusicGen model facebook/musicgen-large from the Hugging Face Model Hub. We rely on the MusicgenForConditionalGeneration Transformers module to load the pre-trained MusicGen model.
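As a rough illustration of the loading step described above, a model_fn along the following lines could sit in inference.py. This is a minimal sketch rather than the post's actual script: it assumes the serving container calls model_fn with the model directory, and it ignores that directory because the weights come from the Hugging Face Hub.

```python
MODEL_ID = "facebook/musicgen-large"


def model_fn(model_dir):
    """Load MusicGen once, when the endpoint container starts.

    model_dir is supplied by the serving container; it is unused in this
    sketch because the weights are pulled from the Hugging Face Hub.
    """
    # Imported lazily so this module can be inspected on a machine that
    # does not have the transformers package installed.
    from transformers import MusicgenForConditionalGeneration

    return MusicgenForConditionalGeneration.from_pretrained(MODEL_ID)
```

Loading inside model_fn means the download happens once per container start rather than once per request.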

You can also refer to musicgen-large-load-from-s3/deploy-musicgen-large-from-s3.ipynb, which demonstrates the best practice of downloading the model from the Hugging Face Hub to Amazon S3 and reusing the model artifacts for future deployments. Instead of downloading the model from Hugging Face every time we deploy or when scaling happens, we download the model to Amazon S3 once and reuse it for deployment and during scaling activities. Doing so can improve the download speed, especially for large models, because the download no longer happens over the internet from a website outside of AWS. This best practice also maintains consistency, which means the same model from Amazon S3 can be deployed across various staging and production environments.

The predict_fn function uses the data provided during the inference request and the model loaded through model_fn.

Using the information available in the data dictionary, we process the input data to obtain the prompt and generation parameters used to generate the music. We discuss the generation parameters in more detail later in this post.

We load the model to the device and then send the inputs and generation parameters as inputs to the model. This process generates the music in the form of a three-dimensional Torch tensor of shape (batch_size, num_channels, sequence_length).

We then use the tensor to generate .wav music files, upload these files to Amazon S3, and clean up the .wav files saved on disk. We then obtain the S3 URIs of the .wav files and send their locations in the response.
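The first of the predict_fn steps above, extracting the prompt and generation parameters from the request, can be sketched as follows. The helper name parse_request and the generation_params key are hypothetical; the texts key and the default values are the ones this post describes.

```python
# Defaults described in this post; the "generation_params" key name is an
# assumption made for this sketch.
DEFAULT_GENERATION_PARAMS = {
    "guidance_scale": 3,
    "max_new_tokens": 256,
    "do_sample": True,
    "temperature": 1,
}


def parse_request(data):
    """Split a request dictionary into prompts and generation parameters."""
    texts = data.get("texts")
    if isinstance(texts, str):
        texts = [texts]  # accept a single prompt as well as a list
    if not texts:
        raise ValueError("the request must contain at least one text prompt")
    # Caller-supplied values override the defaults.
    overrides = data.get("generation_params") or {}
    params = {**DEFAULT_GENERATION_PARAMS, **overrides}
    return list(texts), params


texts, params = parse_request(
    {"texts": ["warm lo-fi beat"], "generation_params": {"max_new_tokens": 512}}
)
```

Merging over a dictionary of defaults keeps the request small: a caller only sends the parameters it wants to change.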

We now create the archive of the inference scripts and upload it to the S3 bucket.

The uploaded URI of this object on Amazon S3 will later be used to create the Hugging Face model.
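The packaging and upload step might look like the following sketch. It assumes the serving scripts are placed under a top-level code/ folder inside the archive, which is our understanding of the layout the Hugging Face inference container expects; the function names, bucket, and key are hypothetical.

```python
import os
import tarfile


def build_serving_archive(code_dir, archive_path="model.tar.gz"):
    """Package inference.py and requirements.txt under a code/ folder."""
    with tarfile.open(archive_path, "w:gz") as tar:
        for name in ("inference.py", "requirements.txt"):
            tar.add(os.path.join(code_dir, name), arcname=f"code/{name}")
    return archive_path


def upload_archive(archive_path, bucket, key):
    """Upload the archive and return its S3 URI.

    Requires boto3 and AWS credentials; boto3 is imported lazily so the
    packaging step above works without it.
    """
    import boto3

    boto3.client("s3").upload_file(archive_path, bucket, key)
    return f"s3://{bucket}/{key}"
```

The URI returned by upload_archive plays the role of s3_model_location in the next section.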

Create the Hugging Face model

Now we initialize HuggingFaceModel with the necessary arguments. During deployment, the model serving artifacts, stored in s3_model_location, will be deployed. Before the model is served, the MusicGen model will be downloaded from Hugging Face as per the logic in model_fn.

The env argument accepts a dictionary of parameters such as TS_MAX_REQUEST_SIZE and TS_MAX_RESPONSE_SIZE, which define the byte size values for request and response payloads to the asynchronous inference endpoint. The TS_DEFAULT_RESPONSE_TIMEOUT key in the env dictionary represents the timeout in seconds after which the asynchronous inference endpoint stops responding.

You can run MusicGen with the Hugging Face Transformers library from version 4.31.0 onwards. Here we set transformers_version to 4.37. MusicGen requires PyTorch version 2.1 or later, and we have set pytorch_version to 2.1.
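Putting the arguments above together, the model object could be created roughly as follows. This is a sketch under assumptions: the payload size and timeout values are illustrative, py_version="py310" is our guess at the Python version paired with these framework versions, and build_env and create_huggingface_model are hypothetical helper names.

```python
def build_env(max_payload_bytes=1_000_000_000, response_timeout_s=3600):
    """Container environment variables; all values must be strings."""
    return {
        "TS_MAX_REQUEST_SIZE": str(max_payload_bytes),
        "TS_MAX_RESPONSE_SIZE": str(max_payload_bytes),
        "TS_DEFAULT_RESPONSE_TIMEOUT": str(response_timeout_s),
    }


def create_huggingface_model(s3_model_location, role):
    """Create the model object (requires the sagemaker SDK)."""
    from sagemaker.huggingface import HuggingFaceModel

    return HuggingFaceModel(
        model_data=s3_model_location,  # S3 URI of the serving archive
        role=role,                     # IAM role ARN assumed by SageMaker
        env=build_env(),
        transformers_version="4.37",
        pytorch_version="2.1",
        py_version="py310",            # assumption, see the note above
    )
```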

Define asynchronous inference configuration

Music generation using a text prompt as input can be both computationally intensive and time-consuming. Asynchronous inference in SageMaker is designed to handle these demands. When working with music generation models, it’s important to note that the process can often take more than 60 seconds to complete.

SageMaker asynchronous inference queues incoming requests and processes them asynchronously, making it ideal for requests with large payload sizes (up to 1 GB), long processing times (up to 1 hour), and near real-time latency requirements. By queuing incoming requests and processing them asynchronously, this capability efficiently handles the prolonged processing times inherent in music generation tasks. Moreover, asynchronous inference enables seamless auto scaling, making sure that resources are allocated only when needed, leading to cost savings.

Before we proceed with the asynchronous inference configuration, we create SNS topics for success and failure that can be used to perform downstream tasks.
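Creating the two topics could be sketched as follows. The helper name and the topic name prefix are hypothetical; the client is expected to be a boto3 SNS client, for example boto3.client("sns").

```python
def create_notification_topics(sns_client, prefix="musicgen-async"):
    """Create success and error topics and return their ARNs.

    create_topic is idempotent: calling it again with the same name
    returns the ARN of the existing topic.
    """
    arns = {}
    for outcome in ("success", "error"):
        response = sns_client.create_topic(Name=f"{prefix}-{outcome}")
        arns[outcome] = response["TopicArn"]
    return arns
```

Passing the client in as an argument keeps the helper easy to exercise with a stub before running it against a real account.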

We now create an asynchronous inference endpoint configuration by specifying the AsyncInferenceConfig object.

The arguments to the AsyncInferenceConfig are detailed as follows:

- output_path – The location where the output of the asynchronous inference endpoint will be stored. The files in this location will have an .out extension and will contain the details of the asynchronous inference performed by the MusicGen model.

- notification_config – Optionally, you can associate success and error SNS topics. Dependent workflows can subscribe to these topics to make informed decisions based on the inference outcomes.
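The two arguments in the list above can be assembled as in the following sketch. The helper names and the output prefix are hypothetical; SuccessTopic and ErrorTopic are the keys the SageMaker Python SDK expects in notification_config.

```python
def async_config_kwargs(bucket, prefix, success_topic_arn, error_topic_arn):
    """Keyword arguments for the SDK's AsyncInferenceConfig."""
    return {
        "output_path": f"s3://{bucket}/{prefix}/output",
        "notification_config": {
            "SuccessTopic": success_topic_arn,
            "ErrorTopic": error_topic_arn,
        },
    }


def create_async_config(**kwargs):
    """Build the configuration object (requires the sagemaker SDK)."""
    from sagemaker.async_inference import AsyncInferenceConfig

    return AsyncInferenceConfig(**kwargs)
```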

Deploy the model on SageMaker

With the asynchronous inference configuration defined, we can deploy the Hugging Face model, setting initial_instance_count to 1.
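The deployment call could look like the following sketch. The instance type is an assumption chosen for illustration, not a recommendation from this post, and deploy_async is a hypothetical wrapper around the SDK's deploy method.

```python
def deploy_async(model, async_config, instance_type="ml.g5.2xlarge"):
    """Deploy the model to an asynchronous endpoint with one instance."""
    return model.deploy(
        initial_instance_count=1,
        instance_type=instance_type,  # assumed GPU instance type
        async_inference_config=async_config,
    )
```

Here model is the HuggingFaceModel created earlier and async_config is the AsyncInferenceConfig object; the return value is the predictor for the new endpoint.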

After successfully deploying, you can optionally configure automatic scaling for the asynchronous endpoint. With asynchronous inference, you can also scale down your asynchronous endpoint’s instances to zero.
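As one possible starting point for scaling to zero, the endpoint variant can be registered with Application Auto Scaling with a minimum capacity of 0. This is a sketch under assumptions: the variant name AllTraffic is the default used by the SageMaker Python SDK, the maximum capacity is illustrative, and the client is a boto3 application-autoscaling client.

```python
def register_scale_to_zero(autoscaling_client, endpoint_name,
                           variant_name="AllTraffic", max_capacity=2):
    """Register the endpoint variant as a scalable target with a floor of 0."""
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    autoscaling_client.register_scalable_target(
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension="sagemaker:variant:DesiredInstanceCount",
        MinCapacity=0,  # allows the endpoint to scale in to zero instances
        MaxCapacity=max_capacity,
    )
    return resource_id
```

Registering the target only sets the bounds. A scaling policy, for example one that tracks the request backlog, is still needed before the instance count actually changes.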

We now dive into inferencing the asynchronous endpoint for music generation.

Inference

In this section, we show how to perform inference using an asynchronous inference endpoint with the MusicGen model. For the sake of brevity, only significant code snippets are included. The full source code for inferencing the MusicGen model is available in the GitHub repo. The following diagram explains the sequence of steps to invoke the asynchronous inference endpoint.

We detail the steps to invoke the SageMaker asynchronous inference endpoint for MusicGen by prompting a desired mood in natural language using English. We then demonstrate how to download and play the .wav files generated from the user prompt. Finally, we cover the process of cleaning up the resources created as part of this deployment.

Prepare prompt and instructions

For controlled music generation using MusicGen models, it’s important to understand the various generation parameters.

The generation parameters are as follows:

- guidance_scale – The guidance_scale is used in classifier-free guidance (CFG), setting the weighting between the conditional logits (predicted from the text prompts) and the unconditional logits (predicted from an unconditional or ‘null’ prompt). A higher guidance scale encourages the model to generate samples that are more closely linked to the input prompt, usually at the expense of poorer audio quality. CFG is enabled by setting guidance_scale > 1. For best results, use guidance_scale = 3. Our deployment defaults to 3.

- max_new_tokens – The max_new_tokens parameter specifies the number of new tokens to generate. Generation is limited by the sinusoidal positional embeddings to 30-second inputs, meaning MusicGen can’t generate more than 30 seconds of audio (1,503 tokens). Our deployment defaults to 256.

- do_sample – When set to True, the model samples from the predicted token distribution instead of decoding greedily. The model can generate an audio sample conditioned on a text prompt through use of the MusicgenProcessor to preprocess the inputs. The preprocessed inputs can then be passed to the .generate method to generate text-conditional audio samples. Our deployment defaults to True.

- temperature – This is the softmax temperature parameter. A higher temperature increases the randomness of the output, making it more diverse. Our deployment defaults to 1.
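To relate max_new_tokens to clip length, the following sketch converts between tokens and seconds. It assumes a frame rate of 50 tokens per second of audio, which is consistent with the limit of 1,503 tokens for about 30 seconds given above; the helper names are hypothetical.

```python
import math

FRAME_RATE_HZ = 50  # assumed number of tokens per second of audio
MAX_TOKENS = 1503   # upper limit stated in the list above


def tokens_for_duration(seconds):
    """Return the max_new_tokens value needed for a clip of this length."""
    tokens = math.ceil(seconds * FRAME_RATE_HZ)
    if tokens > MAX_TOKENS:
        raise ValueError(f"{seconds} s exceeds the {MAX_TOKENS}-token limit")
    return tokens


def duration_for_tokens(tokens):
    """Return the approximate clip length in seconds for a token budget."""
    return tokens / FRAME_RATE_HZ
```

Under this assumption, the deployment default of 256 tokens corresponds to roughly 5 seconds of audio, and a 10-second clip needs 500 tokens.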

To infer the MusicGen model, we build a payload containing the prompt and the generation parameters. The payload is saved as a JSON file and uploaded to an S3 bucket. We then provide the URI of the input payload, along with other arguments, during the asynchronous inference endpoint invocation.

The texts key accepts an array of texts, which may contain the mood you want to reflect in your generated music. You can include musical instruments in the text prompt to the MusicGen model to generate music featuring those instruments.
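Building the payload, uploading it, and invoking the endpoint could be sketched as follows. The generation_params key, the input prefix, and the helper names are hypothetical; the clients are expected to be boto3 s3 and sagemaker-runtime clients.

```python
import json
import uuid


def build_payload(texts, **generation_params):
    """Assemble the request body from prompts and generation parameters."""
    return {"texts": list(texts), "generation_params": generation_params}


def submit_request(s3_client, runtime_client, endpoint_name, bucket, payload,
                   prefix="musicgen/input"):
    """Upload the payload to S3 and invoke the asynchronous endpoint."""
    key = f"{prefix}/{uuid.uuid4()}.json"  # unique object name per request
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps(payload).encode("utf-8"),
        ContentType="application/json",
    )
    return runtime_client.invoke_endpoint_async(
        EndpointName=endpoint_name,
        InputLocation=f"s3://{bucket}/{key}",
        ContentType="application/json",
        InvocationTimeoutSeconds=3600,
    )


payload = build_payload(
    ["upbeat jazz with saxophone"], guidance_scale=3, max_new_tokens=256
)
```

The call returns as soon as the request is queued; the generated audio is produced later and is not part of this response.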

The response from invoke_endpoint_async is a dictionary of various parameters.

OutputLocation in the response metadata represents the Amazon S3 URI where the inference response payload is stored.

Asynchronous music generation

As soon as the response metadata is sent to the client, the asynchronous inference begins the music generation. The music generation happens on the instance chosen during the deployment of the MusicGen model on the SageMaker asynchronous inference endpoint, as detailed in the deployment section.

Continuous polling and obtaining music files

While the music generation is in progress, we continuously poll the S3 location given by the response metadata parameter OutputLocation.

The get_output function keeps polling for the presence of OutputLocation and returns the S3 URI of the .wav music file.
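A polling loop in the spirit of get_output could be sketched as follows. It is not the post's implementation: wait_for_output is a hypothetical name, it returns the raw contents of the output object rather than the .wav URI, and read_object is a caller-supplied function that returns the object body, or None while the object does not exist yet.

```python
import time
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Split s3://bucket/key into (bucket, key)."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri}")
    return parsed.netloc, parsed.path.lstrip("/")


def wait_for_output(output_location, read_object, interval_s=5,
                    timeout_s=3600, sleep=time.sleep):
    """Poll until the output object exists, then return its contents."""
    bucket, key = split_s3_uri(output_location)
    waited = 0
    while waited <= timeout_s:
        body = read_object(bucket, key)
        if body is not None:
            return body
        sleep(interval_s)  # wait before checking again
        waited += interval_s
    raise TimeoutError(f"no output at {output_location} after {timeout_s} s")
```

With boto3, read_object could wrap the S3 client's get_object call and return None when the service reports that the key does not exist. The caller then parses the returned contents to find the .wav locations.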

Audio output

Finally, we download the files from Amazon S3 and play the output.
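Downloading a generated file could be sketched as follows. The helper name is hypothetical, and the client is expected to be a boto3 S3 client.

```python
import os
from urllib.parse import urlparse


def download_wav(s3_client, s3_uri, dest_dir="."):
    """Download one .wav file from S3 and return the local path."""
    parsed = urlparse(s3_uri)
    bucket, key = parsed.netloc, parsed.path.lstrip("/")
    local_path = os.path.join(dest_dir, os.path.basename(key))
    s3_client.download_file(bucket, key, local_path)
    return local_path
```

In a notebook, the downloaded file can then be played with IPython.display.Audio(filename=local_path).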

You now have access to the .wav files and can try changing the generation parameters to experiment with various text prompts.

The following is another music sample based on the following generation parameters:

Clean up

To avoid incurring unnecessary charges, clean up the resources you created.

The cleanup routine deletes the SageMaker endpoint, endpoint configurations, and models associated with the MusicGen model, so that you avoid incurring unnecessary charges. Make sure to set the cleanup variable to True, and replace
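A cleanup routine along these lines could be sketched as follows. The function name is hypothetical and the cleanup flag mirrors the variable mentioned above; the client is expected to be a boto3 sagemaker client.

```python
def cleanup_endpoint(sm_client, endpoint_name, cleanup=False):
    """Delete the endpoint, its configuration, and its models.

    Nothing is deleted unless cleanup is True. Returns the names of the
    deleted resources.
    """
    if not cleanup:
        return []
    # Look up the dependent resources before deleting the endpoint.
    endpoint = sm_client.describe_endpoint(EndpointName=endpoint_name)
    config_name = endpoint["EndpointConfigName"]
    config = sm_client.describe_endpoint_config(EndpointConfigName=config_name)
    model_names = [v["ModelName"] for v in config["ProductionVariants"]]

    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for name in model_names:
        sm_client.delete_model(ModelName=name)
    return [endpoint_name, config_name, *model_names]
```

Resolving the configuration and model names from the endpoint itself avoids having to track them separately during deployment.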

Conclusion

In this post, we learned how to use SageMaker asynchronous inference to deploy the AudioCraft MusicGen model. We started by exploring how the MusicGen models work and covered various use cases for deploying MusicGen models. We also explored how you can benefit from capabilities such as auto scaling and the integration of asynchronous endpoints with Amazon SNS to power downstream tasks. We then took a deep dive into the deployment and inference workflow of MusicGen models on SageMaker, using the AWS Deep Learning Containers for HuggingFace inference and the MusicGen model sourced from the Hugging Face Hub.

Get started with generating music using your creative prompts by signing up for AWS. The full source code is available on the official GitHub repository.


About the Authors

Pavan Kumar Rao Navule is a Solutions Architect at Amazon Web Services, where he works with ISVs in India to help them innovate on the AWS platform. He specializes in architecting AI/ML and generative AI services at AWS. Pavan is a published author of the book “Getting Started with V Programming.” In his free time, Pavan enjoys listening to the great magical voices of Sia and Rihanna.


David John Chakram is a Principal Solutions Architect at AWS. He specializes in building data platforms and architecting seamless data ecosystems. With a profound passion for databases, data analytics, and machine learning, he excels at transforming complex data challenges into innovative solutions and driving businesses forward with data-driven insights.


Sudhanshu Hate is a principal AI/ML specialist with AWS and works with clients to advise them on their MLOps and generative AI journey. In his previous role before Amazon, he conceptualized, created, and led teams to build ground-up open source-based AI and gamification platforms, and successfully commercialized them with over 100 clients. Sudhanshu has a couple of patents to his credit, has written two books and several papers and blogs, and has presented his points of view in various technical forums. He has been a thought leader and speaker, and has been in the industry for nearly 25 years. He has worked with Fortune 1000 clients across the globe and most recently with digital native clients in India.


Rupesh Bajaj is a Solutions Architect at Amazon Web Services, where he collaborates with ISVs in India to help them leverage AWS for innovation. He specializes in providing guidance on cloud adoption through well-architected solutions and holds seven AWS certifications. With 5 years of AWS experience, Rupesh is also a Gen AI Ambassador. In his free time, he enjoys playing chess.
